Learning to spot a fake review has become one of the most important skills in the digital economy. Our reliance on collective experience, distilled into star ratings and testimonial text, has created a profound Digital Trust Crisis. Reviews, once a genuine source of community feedback, are now among the most valuable currencies in the marketplace. Whether selecting a neighborhood bistro or investing in cutting-edge electronics, consumers defer to the Aggregate Rating as a presumed objective measure of quality. However, this decisive influence over commercial success has made reviews a primary target of market manipulation. The unchecked proliferation of fraud is not a minor inconvenience; it is a systemic threat that distorts market signals and erodes the fundamental trust between buyers and sellers, which is why every participant needs robust Internet Literacy.

In an era defined by information overload, the critical ability to filter, classify, and authenticate information is paramount. We define this essential modern competency as Internet Literacy—a survival skill indispensable for discerning verifiable facts from rumors, and distinguishing genuine consumer experience from deliberate deceit. Within this framework, the ability to recognize and reject fraudulent reviews is not just advisable; it is a critical, non-negotiable skill.

When a consumer accepts and acts upon a fake review, they become an unwitting financial supporter of an unethical business model, simultaneously penalizing honest merchants who invest in quality and integrity. Reports of buyer’s remorse point to a harsh lesson: many consumers have purchased inferior products because they placed their faith in the rating alone, neglecting the vital step of critical analysis. This dependence on a single metric has left consumers highly vulnerable to organized deception.

The necessity of developing this specialized literacy is underscored by alarming statistics. Industry studies estimate that billions of dollars are lost annually due to consumers being misled by fraudulent assessments. Fake reviews do more than just facilitate poor purchases; they corrupt the very data set that shapes the perception of value and quality. This article serves as a comprehensive educational tool, moving beyond anecdotal evidence to provide a structured, analytical framework for detection.

Our overarching goal is threefold: first, to illuminate the current reality of the fake review ecosystem from the perspectives of both the vulnerable consumer and the victimized merchant; second, to provide a structured, seven-point checklist of Red Flags based on linguistic, temporal, and behavioral anomalies; and third, to offer practical, real-world strategies and tools for detection and countermeasures.

The following sections will dissect the market pathology, moving from the broad consequences of manipulation to the minute, forensic details necessary to unmask the fraudsters. By the conclusion of this guide, the reader will possess the necessary skepticism and technical insight to navigate the volatile landscape of online feedback, reasserting control over their purchasing decisions and contributing to a more transparent marketplace. This is a call to arms for digital citizenship, transforming passive consumers into active, discerning judges of market information. We aim to convert the current climate of consumer vulnerability into one of empowered, data-driven discernment, making the cost of perpetrating fake review schemes prohibitive for all unethical actors.

The Dual Impact of Fraud: A Crisis for Consumers and Merchants

The proliferation of fake reviews has established a devastating feedback loop, equally damaging to the bottom line of ethical businesses and the wallet of the trusting consumer. This section provides a detailed forensic analysis of the crisis from two critical vantage points: the beleaguered merchant and the deceived buyer.

The crisis for businesses is fundamentally one of reputational terrorism. Merchants, particularly small and medium-sized enterprises (SMEs), frequently encounter sophisticated and damaging attacks in the form of unsubstantiated one-star reviews. These ratings are weaponized, deployed by unscrupulous competitors or highly aggressive former customers seeking retribution. The attacks are often characterized by their deliberate vagueness, making them difficult to challenge. The reviewer offers a low rating but provides no actionable context, transactional evidence, or specific complaint. Illustrative Example: A boutique digital marketing agency receives a flurry of one-star ratings on Google Maps. The text for one such review is simply: “Service wasn’t good.” This review lacks any detail regarding the project, the date of engagement, or the name of the staff member involved. This strategic ambiguity is intentional; it is designed to cause maximum damage while providing minimum evidence for the merchant to refute the claim. The lack of detail immediately flags the review as suspicious to an informed observer but poses a significant challenge to the platform’s moderation system.

These baseless attacks exact a heavy toll. Studies show that a single one-star drop in a business’s Yelp or Google rating can correlate with a significant percentage decrease in revenue. For small businesses, this can be catastrophic. Furthermore, the emotional cost is immense; honest owners are forced to dedicate invaluable time and resources to forensic investigations—cross-referencing customer lists, checking call logs, and pleading with platform support—just to maintain their integrity. A major impediment is the reluctance of large platforms to act aggressively. Their moderation teams often operate on strict, black-and-white policies. Reviews are typically only removed if they contain clear violations such as hate speech, harassment, or explicit derogatory language. The challenge lies in proving that a review is fake when the text itself is neutral, albeit baseless. The platform’s standard reply is often a variant of: “The review does not violate our policies or Terms & Conditions, so we can’t help you.” This systemic failure leaves businesses in a state of constant vulnerability.

For the consumer, the danger lies in the human tendency to rely on simple metrics. The convenience of a high star rating masks the manipulation occurring beneath the surface. Consumers are vulnerable because they often treat the Aggregate Rating as an immutable score, failing to account for the possibility that the score itself has been strategically poisoned. This reliance easily leads to the purchase of subpar or dangerous products. Illustrative Example (The Headphone Trap): A consumer researches a pair of noise-canceling headphones, noting its persuasive 4.8-star rating derived from over 1,000 reviews. Based on this metric alone, the consumer makes the purchase. In use, the battery, a crucial feature, lasts only two hours, not the advertised six. A closer look at the reviews then reveals that a high percentage of the positive ones were posted by disposable accounts using generic, promotional language. The consumer’s regret is compounded by the realization that their money has supported the very scam that misled them.

The continuous erosion of trust has wider economic consequences. When consumers realize they cannot trust the primary sources of market information, they begin to disengage, leading to review fatigue and a preference for well-established, legacy brands, stifling innovation from new entrants who genuinely rely on positive, organic feedback to compete. Furthermore, the necessity of buying third-party analytical tools to verify reviews adds an unnecessary “tax on skepticism” to the consumer experience. The crisis transforms the market from a meritocracy based on product quality into a digital battlefield where the winner is the one most adept at deception.

Forensic Analysis: Categorizing Fraud and Detecting the First Red Flags

The defense against fraudulent reviews requires moving beyond generalized suspicion to a structured, forensic analysis. This section establishes the critical taxonomy of fake reviews and introduces the first three, most powerful behavioral and technical indicators of deceit, which act as immediate red flags for both consumers and merchants.

Understanding why a fake review is written is crucial for recognizing how it is constructed. Fake reviews predominantly originate from two distinct sources: Non-Customer Reviews (The Attacker) and Self-Serving Reviews (The Promoter/Saboteur). The Non-Customer Review involves an individual deliberately posting a rating and/or text commentary about a product or service without ever having been a verifiable customer or user. These accounts bypass internal platform verification checks through various means, often leveraging fake identities or hijacked accounts. The primary objective is malicious sabotage or retribution, often funded by competitors engaging in “review bombing,” a rapid deployment of low ratings to drastically lower a rival’s aggregate score. Illustrative Example: A user posts a one-star review for “Salon B” on Yelp or Google Maps. However, when the salon checks its appointment and payment records over the last year, there is no evidence of this reviewer ever having booked or paid for a service. This disconnect between the reviewer and any transaction strongly suggests non-customer status and, with it, malicious intent, although a customer who booked under a different name remains possible.

Conversely, the Self-Serving Review involves individuals or organized groups—including the product sellers themselves, paid affiliates, or third-party reputation agencies—who review or rate products without objective usage. Their content is crafted solely to promote their own brand or systematically attack a competitor’s. This is a clear propaganda effort. The content is often written to mimic promotional copy rather than genuine user experience, or designed to launch a highly focused attack on a specific rival’s product features. Illustrative Example: A technology blogger or affiliate reviewer gives a five-star rating to “Product A” from “Brand X” immediately upon its release, using language that is excessively promotional and lacks critical depth. Contrast this with established, independent reviewers who require a minimum of one week to test core battery life, performance, and durability before publishing a credible review. The speed and hyper-positive language signal a self-serving transaction.

These two forms of fraud necessitate a set of rigorous checks. The following forensic flags allow consumers to adopt a technical mindset, transforming raw data into actionable intelligence.

The Seven Critical Red Flags

The first three flags are rooted in technical, temporal, and behavioral anomalies that are difficult for fraudsters to disguise consistently.

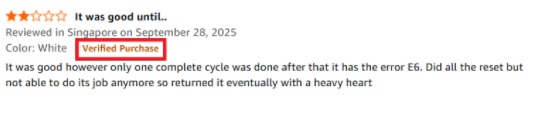

1. The Missing “Verified Purchase” Status.

The “Verified Purchase” badge is the most fundamental technical assurance offered by major e-commerce platforms. It confirms the review originated from an account that successfully completed a purchase of the specific product being reviewed. The Red Flag: The absence of the “Verified Purchase” label when the product is readily available through the platform is a major indicator of potential fraud. Fraudulent accounts often obtain the product through third-party means (e.g., direct shipment from the manufacturer, competitor exchange) or are simply posting the review text without ever possessing the item. A missing badge is not proof of fraud on its own, since a genuine owner may have bought the item elsewhere, but a review lacking this tag should be viewed with significantly elevated scrutiny. Warning Example: A sprawling, 500-word, five-star review detailing the product’s “life-changing” quality has no “Verified Purchase” tag. This extensive, positive narrative, decoupled from transactional evidence, is far more suspicious than a short, neutrally-phrased three-star review with the badge, as the latter at least confirms the user had skin in the game.
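
One way to put this flag to work is to compare what verified and unverified reviewers say about the same product. The sketch below is illustrative only: the `rating` and `verified` keys are hypothetical field names, not any platform's actual data format, and a gap between the two groups is a reason to look closer, not proof of fraud.

```python
from statistics import mean

def split_by_verification(reviews):
    """Return (verified_mean, unverified_mean, unverified_share).

    Each review is a dict with hypothetical keys 'rating' (1-5) and
    'verified' (bool). A mean is None when its group is empty.
    """
    verified = [r["rating"] for r in reviews if r["verified"]]
    unverified = [r["rating"] for r in reviews if not r["verified"]]
    share = len(unverified) / len(reviews) if reviews else 0.0
    return (
        mean(verified) if verified else None,
        mean(unverified) if unverified else None,
        share,
    )

# Made-up sample: the unverified reviews are all glowing.
sample = [
    {"rating": 5, "verified": False},
    {"rating": 5, "verified": False},
    {"rating": 3, "verified": True},
    {"rating": 4, "verified": True},
]
verified_mean, unverified_mean, share = split_by_verification(sample)
```

In this made-up sample, half the reviews are unverified and they average 5 stars against 3.5 for verified buyers, which is the kind of gap worth investigating.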

2. Suspicious Timeline Clustering (The “Review Bomb”).

Authentic consumer feedback is inherently organic and distributed over time, reflecting natural purchasing cycles. Fraudulent reviews, conversely, are often deployed in highly concentrated, artificial bursts. The Red Flag: A large volume of reviews (either all five-star or all one-star) suddenly appears within a narrow 24- to 72-hour period, entirely disconnected from any significant promotional event. This timeline clustering is the signature of automated scripts or manual teams hired to execute a “review bomb.” Warning Example: For a product that has been on the market for two years and normally collects only a handful of reviews a month, receiving 30 five-star reviews in 48 hours is statistically anomalous. This method is used to quickly manipulate the aggregate rating before platform algorithms can detect the unusual behavioral pattern.
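
The clustering check can be sketched as a sliding window over review timestamps. This is a minimal illustration, not how any platform actually detects bursts; the function name `find_bursts` is hypothetical, and the 48-hour window and 30-review threshold are assumptions borrowed from the warning example.

```python
from datetime import datetime, timedelta

def find_bursts(timestamps, window=timedelta(hours=48), threshold=30):
    """Return (window_start, count) for each review that opens a window
    containing at least `threshold` reviews."""
    times = sorted(timestamps)
    bursts = []
    end = 0
    for start, t0 in enumerate(times):
        # Advance `end` past every review inside [t0, t0 + window].
        while end < len(times) and times[end] - t0 <= window:
            end += 1
        count = end - start
        if count >= threshold:
            bursts.append((t0, count))
    return bursts

# Made-up history: one review every 30 days, then 30 reviews in 30 hours.
quiet = [datetime(2024, 1, 1) + timedelta(days=30 * i) for i in range(10)]
burst = [datetime(2024, 6, 1) + timedelta(hours=i) for i in range(30)]
bursts = find_bursts(quiet + burst)
```

A sensible threshold depends on the product's normal review rate, so in practice it would be set relative to that baseline and not fixed at 30.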

3. Reviewer Profile Monotony (Behavioral Forensics).

A genuine reviewer is a human consumer with diverse interests; their profile should reflect a varied history of purchases and ratings across different categories. Fraudulent profiles are specialized tools designed for a single purpose. The Red Flag: The reviewer’s profile contains only a single review, the one for the product in question, or their entire history is devoted to either extreme praise or targeted negative attacks. A profile used only once is highly suspicious, often being a disposable account created solely for the fraud. If the profile’s history shows concentrated negative activity targeting only one competitor’s brand, this strongly suggests a coordinated, financially motivated attack originating from the rival’s camp. Warning Example: A user named “David M.” posts a single one-star review for “Store A.” Further investigation reveals the “David M.” account was created exactly two days prior to posting the review and has no other activity, pointing strongly to a manufactured identity for a specific act of sabotage.
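
The profile checks above translate into a few simple rules. The sketch below assumes a hypothetical `ReviewerProfile` record with only a creation date and a list of past ratings. Real platforms expose different profile data, and the seven-day account-age cutoff is an arbitrary illustration.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class ReviewerProfile:
    # Hypothetical fields; real platforms expose different profile data.
    created: date   # account creation date
    ratings: list   # star rating (1-5) of every review on the profile

def monotony_flags(profile, review_date, min_age_days=7):
    """Return human-readable reasons to look more closely at a profile."""
    flags = []
    if len(profile.ratings) <= 1:
        flags.append("single-review profile")
    if (review_date - profile.created).days < min_age_days:
        flags.append("account created shortly before the review")
    if len(profile.ratings) > 1 and all(r in (1, 5) for r in profile.ratings):
        flags.append("history contains only extreme ratings")
    return flags

# The "David M." scenario: account created two days before its only review.
david = ReviewerProfile(created=date(2024, 3, 1), ratings=[1])
david_flags = monotony_flags(david, review_date=date(2024, 3, 3))

# A long-standing account with a varied history raises no flags.
regular = ReviewerProfile(created=date(2019, 5, 1), ratings=[4, 5, 3, 2, 5])
regular_flags = monotony_flags(regular, review_date=date(2024, 3, 3))
```

Returning reasons instead of a single yes/no keeps the judgment with the reader, which matters because a first-time reviewer can be perfectly genuine.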

Linguistic Forensics: Exposing Manipulation Through Language and Specificity

Building upon the behavioral and temporal checks established in the previous section, the final four critical red flags delve into the linguistic DNA of the review itself. Fraudsters can mask their identity and time their attacks, but they often struggle to fabricate the subtle, complex language patterns of genuine human experience. These linguistic forensic checks are often the most powerful tools available to the discerning consumer.

4. Linguistic Echoes (Templated Language and Repetition).

Authentic feedback is unique to the individual’s experience, resulting in diverse vocabulary and sentence structure. When reviews are generated by centralized fraud operations, whether through human “review farms” or automated scripts, they rely on templated language to maximize efficiency. This results in linguistic similarities that are very unlikely to arise by chance. The Red Flag: Multiple, distinct user accounts employ identical phrases, standardized sentence structures, or highly specific, often hyperbolic, keywords. These language patterns are typically derived from the seller’s own promotional materials, making the review sound more like marketing copy than a personal endorsement. Warning Example: Across ten different five-star reviews for the same product, the reader repeatedly encounters the exact phrase, “this gadget is an absolute game-changer” or “worth every penny, truly marvelous.” This kind of verbatim repetition across unrelated user profiles is a clear indication that the content was generated from a single source, a script supplied to multiple paid accounts. Furthermore, automated textual analysis looks at subtler signals, such as how often first-person pronouns (I, me, my) appear and whether verbs and adverbs cluster unnaturally; published research on deceptive reviews has reported that fabricated text tends to overuse first-person pronouns and lean on verbs and adverbs, while genuine reviews carry more concrete nouns and detail. The psychological effect of repetition is powerful, but forensically, it is a catastrophic operational flaw for the fraudster.
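
Verbatim repetition is one of the easier signals to check mechanically. The sketch below looks for word sequences shared across several reviews; the function name `shared_phrases`, the four-word phrase length, and the three-review cutoff are all illustrative assumptions, not settings used by any particular tool.

```python
import re
from collections import defaultdict

def shared_phrases(reviews, n=4, min_reviews=3):
    """Map each n-word phrase to the set of review indices containing it,
    keeping only phrases found in at least `min_reviews` reviews."""
    seen = defaultdict(set)
    for idx, text in enumerate(reviews):
        # Lowercase and split on non-letters so punctuation is ignored.
        words = re.findall(r"[a-z']+", text.lower())
        for i in range(len(words) - n + 1):
            seen[" ".join(words[i:i + n])].add(idx)
    return {p: ids for p, ids in seen.items() if len(ids) >= min_reviews}

# Made-up reviews: three share a template, one is independent.
reviews = [
    "Honestly, this gadget is an absolute game-changer for my kitchen.",
    "This gadget is an absolute game-changer, five stars!",
    "Bought two. This gadget is an absolute game-changer.",
    "Battery died after a month, and support was slow to reply.",
]
echoes = shared_phrases(reviews)
```

Short common phrases ("I would recommend this") will also repeat across honest reviews, so longer phrases and higher cutoffs give fewer false alarms.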

5. Exaggerated Emotional Polarization (The Hyperbolic Trap).

Genuine human experience is nuanced, and even a “good” purchase often involves minor criticisms (e.g., “Great product, but the instructions were confusing”). Fraudulent reviews, however, often live at the extreme poles of emotional expression. The Red Flag: The review utilizes excessively dramatic, highly emotional, or hyperbolic language that lacks any measured balance. This is especially true when the review uses all-caps, multiple exclamation points, and a large number of subjective adverbs. Warning Example (Hyper-Positive): “This product is the BEST THING TO EVER HAPPEN TO ME! I literally cannot live without it now!” Warning Example (Hyper-Negative): “This is the WORST FRAUDULENT PRODUCT ON EARTH! Do not buy this unless you want your entire life ruined!” These statements often substitute vague, intense emotion for concrete facts. The fraudster aims to manipulate the reader’s fear or desire instantly. Conversely, a truly authentic negative review often expresses disappointment by focusing on a specific, functional failure (e.g., “The main selling point, the battery life, failed after three weeks”), rather than resorting to personal attacks or catastrophic predictions.
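
The surface markers named above (all-caps words and stacked exclamation points) are simple to count. This is a rough sketch with a hypothetical function name; it measures typography only and says nothing about whether the emotion behind the words is sincere.

```python
import re

def hyperbole_signals(text):
    """Return the share of shouted (all-caps) words and the number of
    exclamation points in a review."""
    words = re.findall(r"[A-Za-z']+", text)
    # Skip one-letter words so the pronoun "I" is not counted as shouting.
    shouted = [w for w in words if len(w) > 1 and w.isupper()]
    return {
        "caps_ratio": len(shouted) / len(words) if words else 0.0,
        "exclamations": text.count("!"),
    }

hyped = ("This product is the BEST THING TO EVER HAPPEN TO ME! "
         "I literally cannot live without it now!")
measured = ("The main selling point, the battery life, "
            "failed after three weeks.")
hyped_signals = hyperbole_signals(hyped)
measured_signals = hyperbole_signals(measured)
```

Here the hyper-positive example has 7 shouted words out of 18 and two exclamation points, while the measured complaint has none of either.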

6. Generalized Language and Lack of Specificity.

A genuine reviewer possesses a sensory memory of the product—they know its texture, its smell, its interface, and the exact date they started using it. Fraudsters, especially those posting Non-Customer Reviews, lack this foundational experiential data. The Red Flag: The review uses vague, ambiguous, and general language that praises or condemns the product without describing any specific feature, material quality, or usage scenario. Warning Example (The Vague Compliment): A five-star review states: “Everything was perfect, I love it! Great customer support.” Notice the absence of verifiable detail: it doesn’t mention the material (Is the plastic durable? Is the screen bright?), any specific feature, or the date of customer interaction. This contrasts sharply with an authentic review: “I love the new matte black finish, but the camera shutter button feels sticky after two weeks of use.” The specificity proves ownership. Fraudsters consciously avoid details because providing them creates a verifiable weakness that could expose their deception.
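
Specificity can be approximated by counting concrete details. The sketch below counts mentions of product features and stated durations; the feature vocabulary is a hypothetical list the reader would supply for the product at hand, and the score is a crude proxy, not a measure of truthfulness.

```python
import re

# Matches stated durations such as "two weeks" or "3 months".
DURATION = re.compile(
    r"\b(?:\d+|one|two|three|four|five|six|seven|eight|nine|ten)\s+"
    r"(?:hour|day|week|month|year)s?\b",
    re.IGNORECASE,
)

def specificity_score(text, feature_terms):
    """Count distinct feature terms plus stated durations in a review."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    features = words & {t.lower() for t in feature_terms}
    durations = DURATION.findall(text)
    return len(features) + len(durations)

# Hypothetical vocabulary for a camera phone.
FEATURES = {"finish", "camera", "shutter", "button", "battery", "screen"}

vague = "Everything was perfect, I love it! Great customer support."
specific = ("I love the new matte black finish, but the camera shutter "
            "button feels sticky after two weeks of use.")
vague_score = specificity_score(vague, FEATURES)
specific_score = specificity_score(specific, FEATURES)
```

The vague compliment scores 0, while the authentic example scores 5 (four feature terms and one duration).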

7. Missing Disclosure in Social Media Reviews (The Regulatory Bypass).

The review landscape has expanded far beyond retailer websites into social media, where influencer marketing reigns. Regulatory bodies, such as the FTC in the United States, mandate that any financial, employment, or personal relationship between a reviewer and a brand must be clearly and conspicuously disclosed. The Red Flag: An influencer or affiliate marketer praises a product in a video, story, or blog post but fails to include the necessary #Ad, #Sponsored, or similar disclosure tags. This falls under the category of a Self-Serving Review (The Promoter) that bypasses ethical and legal standards. Warning Example: A fashion influencer posts a detailed “day in the life” video heavily featuring a new brand of sustainable sneakers, praising their comfort and style, but omits any mention of receiving the product for free or being paid for the promotion. The review is structurally deceptive, aiming to pass off compensated endorsement as genuine, unsolicited enthusiasm. Consumers must treat any review—regardless of the platform—that fails to clearly disclose a partnership with extreme caution.
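
For text captions, a first-pass disclosure check is a simple tag search. The sketch below only looks for the two tags named above. The tag list is an assumption and is not exhaustive, and a caption check cannot see disclosures made verbally in a video or through a platform's built-in paid-partnership label.

```python
import re

# Tags taken from the examples above; extend the list as needed.
DISCLOSURE = re.compile(r"#(?:ad|sponsored)\b", re.IGNORECASE)

def has_disclosure(caption):
    """Return True if the caption contains a recognized disclosure tag."""
    return DISCLOSURE.search(caption) is not None

disclosed = has_disclosure("Loving these sneakers! #Ad")
undisclosed = has_disclosure("Loving these sneakers! #adventure #style")
```

The word-boundary check keeps unrelated tags such as `#adventure` from being mistaken for `#ad`.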

These seven critical checks provide a robust analytical framework. By combining the behavioral and temporal checks (Flags 1-3) with these detailed linguistic and disclosure checks (Flags 4-7), the consumer is equipped to filter out the noise and focus only on the signal of genuine customer experience. The next and final section will explore the specialized tools and counter-strategies available to both consumers and businesses to formalize this defense.

The Counterattack

With the seven critical flags for detecting fraudulent reviews established, the final step is to formalize them into an action plan. This involves deploying specialized third-party tools for immediate analysis and mastering professional counter-strategies for businesses facing attack.

Specialized Tools and Strategic Countermeasures

The sheer volume of online feedback makes manual, review-by-review analysis impractical for most consumers and businesses. Fortunately, a class of specialized third-party analytical tools has emerged to provide algorithmic assistance, acting as forensic filters for review data.

A. Analysis Tools for the Savvy Consumer

These tools leverage sophisticated machine learning and linguistic models to automatically assess review integrity, often scoring products based on the percentage of likely fraudulent reviews:

- Services and Mechanism: Services like ReviewMeta and Fakespot (primarily focused on Amazon) operate by analyzing vast datasets of reviewer behavior. They look for patterns invisible to the naked eye, such as cross-platform review activity, the average posting rate of an account, the number of reviews posted in a single session, and the linguistic diversity within a user’s entire history. Availability changes over time (Mozilla, which acquired Fakespot in 2023, shut the service down in 2025), so confirm that a given tool is still operating before relying on it.

- Actionable Output: These services generate an adjusted, more accurate rating for a product by eliminating reviews flagged as suspicious. Illustrative Example: A Bluetooth speaker boasts an advertised 4.7-star rating from 5,000 reviews on Amazon. After running the data through ReviewMeta, the adjusted, “legit” rating drops to 3.9 stars, indicating that a significant majority of the five-star reviews exhibited tell-tale signs of template use or temporal clustering. By using these tools, consumers effectively outsource the heavy lifting of algorithmic forensic analysis.
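
The arithmetic behind an adjusted rating can be illustrated with a short sketch. It is not the method used by ReviewMeta or any other service: the `suspicious` field is a hypothetical label standing in for whatever checks a tool applies, and the sample data is invented to reproduce the 4.7 and 3.9 figures from the example.

```python
def raw_rating(reviews):
    """Average of all star ratings, rounded to one decimal place."""
    return round(sum(r["rating"] for r in reviews) / len(reviews), 1)

def adjusted_rating(reviews):
    """Average after dropping reviews flagged as suspicious.

    Returns None if every review was flagged.
    """
    kept = [r["rating"] for r in reviews if not r["suspicious"]]
    return round(sum(kept) / len(kept), 1) if kept else None

def make(rating, suspicious, count):
    # Helper that builds `count` identical made-up review records.
    return [{"rating": rating, "suspicious": suspicious}] * count

# Invented data: 80 flagged five-star reviews plus 30 unflagged reviews.
reviews = (
    make(5, True, 80)
    + make(5, False, 12)
    + make(4, False, 9)
    + make(3, False, 3)
    + make(2, False, 6)
)
raw = raw_rating(reviews)
adjusted = adjusted_rating(reviews)
```

With this data the raw average is 4.7 and the adjusted average is 3.9, which shows how many flagged five-star reviews it takes to move a rating that far.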

While incredibly powerful, these tools are guides, not definitive courts of law; they highlight patterns of suspicion that warrant closer human examination. Integrating a quick tool-check before any major purchase is now an indispensable step in Internet Literacy.

B. Professional Counter-Strategy for Businesses

When facing a targeted “review bomb” or individual Non-Customer Reviews, a business’s primary goal shifts from removal to damage mitigation and public defense.

- Clear Reporting and Documentation: The first action is always to follow the platform’s protocol, but with heightened precision. When reporting suspicious activity on platforms like Google Business Profile (formerly Google My Business, or GMB), businesses should “Flag the review as conflict of interest.” This signals to the platform that the review may be an act of sabotage from a competitor, a claim the business can often substantiate through market evidence. Illustrative Example: A business must submit detailed proof, not just a complaint. They should include screenshots of the reviewer’s profile, noting the lack of other activity (Monotony Flag), and provide internal customer logs proving the reviewer’s name does not correspond to any past client or transaction.

- The Public, Professional Response (Damage Control): Since platforms often refuse to remove fake reviews, the review remains visible. The business must respond publicly and politely, turning the attack into a demonstration of professionalism. The response should achieve two goals: (a) offer a resolution, and (b) subtly signal to future readers that the reviewer may not be a real customer. Illustrative Example: A workable public response template is: “Hello [Reviewer Name], we sincerely regret your negative experience. However, we cannot locate any record of your purchase or appointment in our system under this name or date. We are ready to resolve your issue immediately; please contact us directly at [business email or phone number] so we can verify the details and assist you.” This response publicly casts doubt on the reviewer’s legitimacy while simultaneously appearing helpful and attentive to customer service, safeguarding the business’s reputation for future potential customers.

- The Proactive Defense: The most effective defense against sporadic fake reviews is drowning them out. Businesses must actively encourage genuine, authentic reviews from their loyal customer base to ensure the Aggregate Rating is accurate and that the fake reviews are relegated to the bottom of the feed.

Conclusion

The era of blindly trusting star ratings is over. The sophistication of Self-Serving Reviews (propaganda) and Non-Customer Reviews (sabotage) demands that the consumer become an active participant in digital forensics.

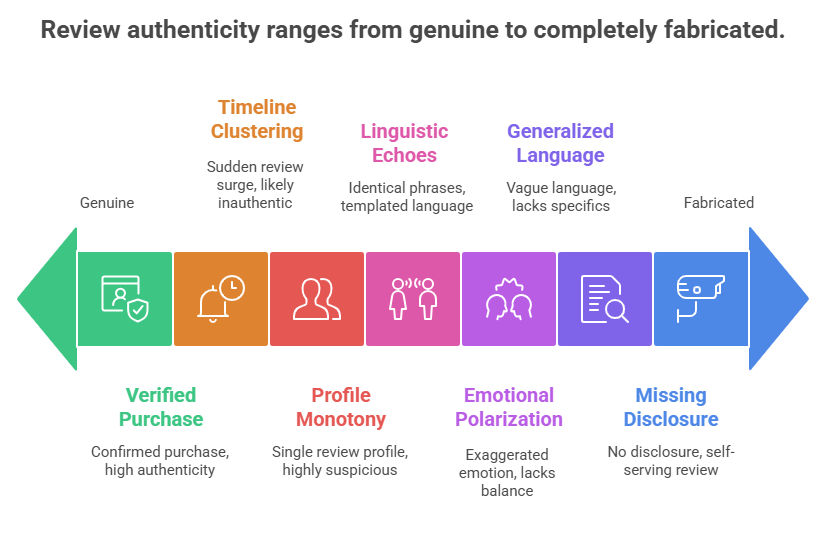

We have established that the key to self-defense lies in recognizing the Behavioral Anomalies (Verified Purchase status, Timeline Clustering, Profile Monotony), the Linguistic Anomalies (Templated Language, Emotional Polarization, Lack of Specificity), and Missing Disclosure in sponsored content. This systematic approach replaces passive reliance on numerical scores with informed, critical analysis.

The ultimate goal of Internet Literacy is empowerment. By applying this 7-Point Checklist and utilizing available analysis tools, you are not merely filtering out noise; you are asserting control over your purchasing decisions, rewarding honest merchants, and punishing fraudulent behavior.

We urge every reader to maintain a healthy, critical skepticism before clicking “Buy.” Never again let a simple, vague five-star rating dictate a major purchase. Use the tools, observe the language, check the source, and ensure that your money supports the integrity and quality that genuine reviews are meant to represent. The future of the transparent marketplace depends on the vigilance of the consumer.